Your conversational AI agent might pass every standard test, yet still fail at the one thing that truly matters: completing real tasks for real users.

It may answer isolated questions like “What’s my refund status?” with perfect accuracy. But in production, things fall apart. The agent forgets context, invokes the wrong tool, or fails to coordinate with the right internal component. The result? A task that starts but never finishes, leaving users confused and unsupported.

This breakdown happens because most testing still focuses on single-turn accuracy. But in complex systems where agents manage workflows, trigger tools, and interact with subsystems, prompt-by-prompt correctness isn’t enough. What matters is whether the entire system can stay on track and deliver end-to-end outcomes.

Galtea’s testing framework is built for exactly this. Our multi-turn, scenario-based evaluations measure task success, not just response quality. We simulate realistic user journeys where your agent must:

- Maintain and update evolving context

- Choose and sequence tools effectively

- Coordinate across internal processes or specialized components

- Stay aligned with the user’s goal from start to finish

With Galtea’s Conversation Simulator and Scenario Generator, you can uncover the breakdowns that traditional tests miss before they affect your users. Because in real-world deployments, passing a test is not enough. Completing the task is what counts.

Why Multi-Turn Testing Is Critical for Task Completion

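Simple Q&A testing misses the real challenges that break task completion in multi-turn conversational AI agents: losing context between turns, invoking the wrong tool, and failing to coordinate with the right internal component.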

These failures often go undetected until production, where they directly impact user experience and business outcomes.

Introducing Galtea’s Conversation Simulator

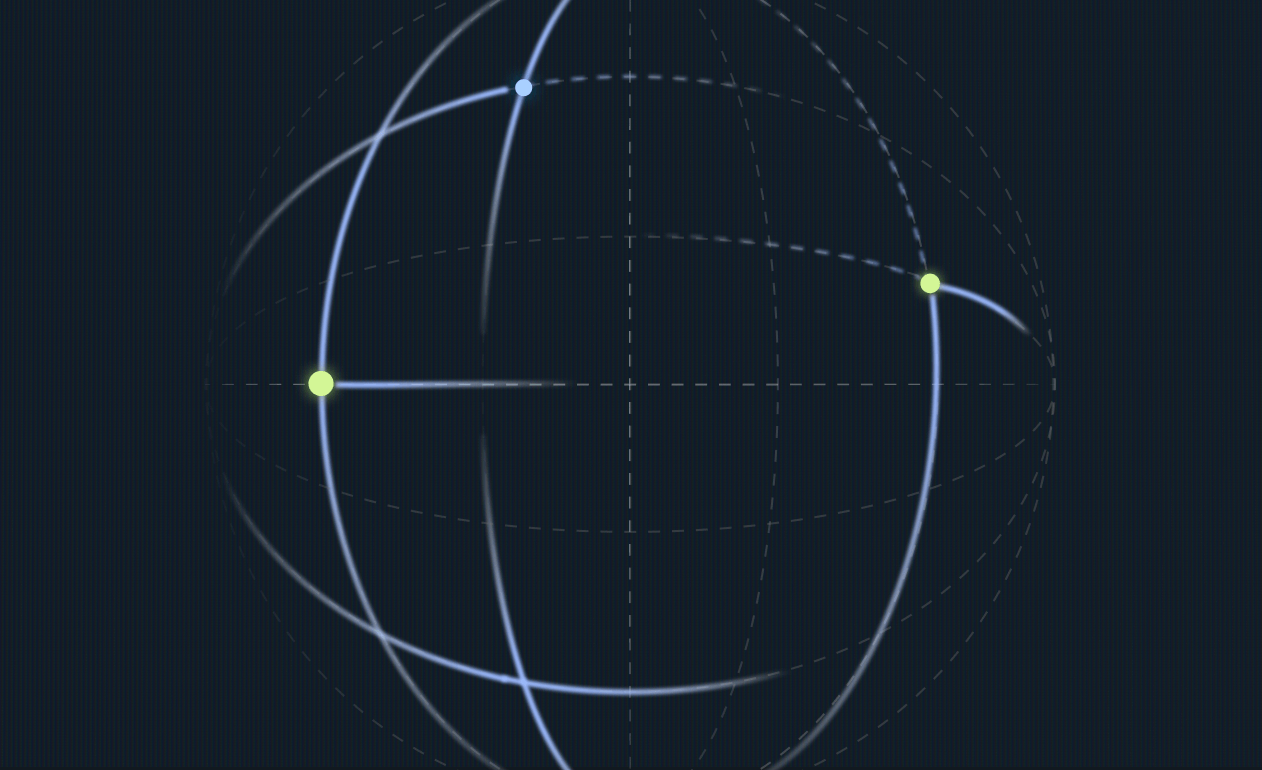

Galtea’s Conversation Simulator is a framework designed specifically for testing conversational AI systems in realistic dialogue scenarios. Instead of isolated Q&A pairs, it generates dynamic user messages that simulate authentic multi-turn conversations guided by structured test scenarios.

See It In Action

Before diving into the details, take a quick look at our demo below to see how the Conversation Simulator works in just under 2 minutes!

In this demo, you’ll learn how to:

- Set up dynamic test scenarios

- Run a simulation to see it in action

- Review the results and insights to improve your AI

How It Works

Each simulation runs your AI through a complete conversational flow, driven by:

- User goals: What the user wants to accomplish in the conversation

- Personas: The user’s background, communication style, and behavioral traits

- Scenarios: The specific context or situation that frames the interaction

- Success criteria: Clear definitions of what constitutes a successful conversation outcome
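To make these four drivers concrete, here is a minimal sketch of how a single test scenario could be represented in Python. The class and field names (`TestScenario`, `Persona`, `success_criteria`) are hypothetical and are not Galtea’s SDK types; the HR values are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    # Hypothetical fields: who the simulated user is and how they talk.
    description: str
    communication_style: str
    traits: list[str] = field(default_factory=list)


@dataclass
class TestScenario:
    goal: str                    # what the user wants to accomplish
    persona: Persona             # background, style, behavioral traits
    scenario: str                # the situation framing the conversation
    success_criteria: list[str]  # what a successful outcome looks like


# Illustrative scenario for an HR support bot (not generated by Galtea).
hr_case = TestScenario(
    goal="Request a leave of absence longer than 2 months",
    persona=Persona(
        description="Full-time employee planning extended parental leave",
        communication_style="polite but brief",
        traits=["unsure of company policy"],
    ),
    scenario="The employee contacts the HR support bot through the internal chat.",
    success_criteria=[
        "Bot explains which leave policy applies",
        "Bot collects start and end dates",
        "Bot confirms the request was submitted",
    ],
)
```

Keeping success criteria as explicit data, rather than implicit in a test script, is what lets the same scenario be re-run and scored as the agent changes.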

Key Use Cases

Dialogue Flow Testing

Ensure your AI produces coherent, logical conversations that feel natural to users.

Role Adherence

Verify your agent maintains its intended personality, expertise level, and communication style throughout extended interactions.

Task Completion

Test whether your AI can successfully guide users through complex, multi-step processes.

Robustness Testing

Discover how your AI handles unexpected user behavior, topic changes, or ambiguous requests.

Integration Ready: The simulator integrates seamlessly into CI/CD pipelines using the Galtea Python SDK, enabling continuous testing as your AI evolves.

Getting Started: The Agent Interface

Before diving into simulations, you’ll need to wrap your conversational AI using Galtea’s Agent interface. This simple Python class ensures your agent receives the full conversation context and can be evaluated under realistic conditions.
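The key idea is that the agent is called with the whole conversation, not just the latest message. The sketch below illustrates that idea with a hypothetical `ConversationalAgent` base class and a toy `RefundBot`; it is not Galtea’s Agent class, whose actual method names and types may differ, so check the SDK documentation before adapting it.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Message:
    role: str     # "user" or "assistant"
    content: str


class ConversationalAgent(ABC):
    """Hypothetical interface: the agent sees the full history, not one prompt."""

    @abstractmethod
    def respond(self, history: list[Message]) -> str:
        ...


class RefundBot(ConversationalAgent):
    """Toy agent that resolves a follow-up question using earlier turns."""

    def respond(self, history: list[Message]) -> str:
        last = history[-1].content.lower()
        # Scan all earlier user turns for an order number like "#123".
        order_id = None
        for msg in history:
            if msg.role == "user":
                for token in msg.content.split():
                    if token.startswith("#"):
                        order_id = token
        if "refund" in last and order_id:
            return f"The refund for order {order_id} is being processed."
        if "refund" in last:
            return "Which order is this about?"
        return "How can I help?"


history = [
    Message("user", "I returned order #123 last week"),
    Message("assistant", "Thanks, noted."),
    Message("user", "What's my refund status?"),
]
print(RefundBot().respond(history))  # The refund for order #123 is being processed.
```

Asked in isolation, the last question would only get "Which order is this about?"; the correct answer depends on context from two turns earlier, which is exactly what single-turn tests never exercise.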

Running Your First Simulation

Once your agent is ready, running simulations is straightforward.
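Conceptually, a simulation alternates user and agent turns until the goal is met or a turn limit is reached. The sketch below shows that loop under simplifying assumptions: a scripted list of user messages stands in for the simulator’s dynamically generated user turns, and `simulate`, `is_goal_met`, and `max_turns` are hypothetical names, not Galtea SDK calls.

```python
from typing import Callable

History = list[tuple[str, str]]  # (role, content) pairs


def simulate(
    agent: Callable[[History], str],
    user_turns: list[str],
    is_goal_met: Callable[[History], bool],
    max_turns: int = 10,
) -> tuple[History, bool]:
    """Run scripted user turns against an agent until the goal is met."""
    history: History = []
    for user_msg in user_turns[:max_turns]:
        history.append(("user", user_msg))
        # The agent always receives the whole conversation so far.
        history.append(("assistant", agent(history)))
        if is_goal_met(history):
            return history, True
    return history, False


def leave_agent(history: History) -> str:
    # Toy agent: submits the request once the user asks it to.
    if "submit" in history[-1][1]:
        return "Your leave request has been submitted."
    return "Sure, tell me more."


transcript, success = simulate(
    leave_agent,
    ["I need 3 months of leave", "Please submit it"],
    is_goal_met=lambda h: "submitted" in h[-1][1],
)
print(success)  # True
```

Returning the full transcript alongside the success flag matters: the flag says whether the task finished, and the transcript shows where it went wrong when it did not.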

Evaluate Results
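Evaluation checks each success criterion against the transcript. As a rough sketch, the code below reports the first assistant turn at which each criterion is satisfied, or `None` if it never is. The keyword checks are a deliberately simple stand-in for real evaluation metrics, and `evaluate` and `first_turn_meeting` are hypothetical helpers, not part of Galtea’s SDK.

```python
from typing import Callable, Optional

Transcript = list[tuple[str, str]]  # (role, content) pairs


def first_turn_meeting(transcript: Transcript, check: Callable[[str], bool]) -> Optional[int]:
    """Return the index of the first assistant turn that passes `check`."""
    assistant_turn = 0
    for role, content in transcript:
        if role == "assistant":
            if check(content):
                return assistant_turn
            assistant_turn += 1
    return None


def evaluate(
    transcript: Transcript, criteria: dict[str, Callable[[str], bool]]
) -> dict[str, Optional[int]]:
    return {name: first_turn_meeting(transcript, check) for name, check in criteria.items()}


transcript = [
    ("user", "I need leave"),
    ("assistant", "Which dates?"),
    ("user", "June to September"),
    ("assistant", "Your request for June to September is submitted."),
]
report = evaluate(transcript, {
    "asked_for_dates": lambda t: "dates" in t.lower(),
    "confirmed_submission": lambda t: "submitted" in t.lower(),
    "mentioned_policy": lambda t: "policy" in t.lower(),
})
print(report)  # {'asked_for_dates': 0, 'confirmed_submission': 1, 'mentioned_policy': None}
```

A `None` entry pinpoints a criterion the agent never met, which is the per-turn view that a single pass/fail score hides.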

What Success Looks Like

After running simulations, you’ll get detailed insights into your AI’s performance, scored on the metrics you choose, with visibility into:

Performance Insights:

- Turn-by-turn analysis: See exactly where conversations succeed or fail

- Success metrics: Track goal completion rates across different scenarios

- Context preservation: Monitor how well your AI maintains conversation state

- Performance trends: Identify patterns in failures across persona types or conversation lengths
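Spotting failure patterns usually comes down to grouping results by a dimension such as persona type or conversation length and comparing completion rates. A minimal sketch, assuming each simulation result is a dict with a `success` flag plus the fields to group by; the field names and records are hypothetical.

```python
from collections import defaultdict


def completion_rates(results: list[dict], key: str = "persona") -> dict:
    """Fraction of successful conversations for each value of `key`."""
    totals: dict = defaultdict(int)
    successes: dict = defaultdict(int)
    for r in results:
        totals[r[key]] += 1
        successes[r[key]] += int(r["success"])
    return {group: successes[group] / totals[group] for group in totals}


# Hypothetical results from three simulated conversations.
results = [
    {"persona": "new hire", "turns": 6, "success": True},
    {"persona": "new hire", "turns": 12, "success": False},
    {"persona": "manager", "turns": 4, "success": True},
]
print(completion_rates(results))  # {'new hire': 0.5, 'manager': 1.0}
```

Passing `key="turns"` (or a bucketed length field) gives the same breakdown by conversation length.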

Scenario Generator: Testing What Matters

Creating comprehensive test scenarios manually is time-consuming and often misses edge cases. Galtea’s Scenario Generator solves this by automatically creating diverse, product-specific test cases tailored to your use case.

Our generator creates scenarios that are both grounded in your product’s functionality and diverse enough to catch unexpected failure modes. The goal is comprehensive coverage without the manual effort of writing hundreds of test cases.
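To see why automated generation pays off, consider how quickly combinations grow. The sketch below is a simple combinatorial stand-in, not how Galtea’s generator works (which analyzes your product information): it crosses a few hypothetical HR personas, goals, and contexts into a coverage matrix.

```python
from itertools import product

# Hypothetical dimensions for an HR support bot.
personas = [
    "new hire unfamiliar with policy",
    "manager approving leave",
    "employee who changes topic mid-conversation",
]
goals = ["request parental leave", "check remaining vacation days"]
contexts = ["first contact via chat", "follow-up on a pending request"]

# Every persona paired with every goal in every context.
scenarios = [
    {"persona": p, "goal": g, "context": c}
    for p, g, c in product(personas, goals, contexts)
]
print(len(scenarios))  # 12
```

Three personas, two goals, and two contexts already yield 12 scenarios; each new dimension multiplies the count, which is why writing them by hand stops scaling.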

How It Works

When creating a test, simply select “Generated by Galtea” instead of uploading your own CSV. Our system then analyzes your product information and generates scenarios from it.

This scenario was generated for an HR Support Bot.

Customization Options

Custom Persona Focus

Steer scenario generation toward specific functionalities by providing a focus description:

"Focus on employees that need leave requests longer than 2 months."

Knowledge Base Integration [Coming Soon...]

This upcoming feature will allow you to upload your knowledge base to create even more tailored and grounded personas that reflect your actual use cases and interactions. We plan to launch this functionality in the near future; stay tuned!

Getting Started

Ready to move beyond single-turn testing? Book a demo with us: Galtea Demo

Multi-turn conversations are the future of AI interactions. Don’t let single-turn testing leave you blind to the failures that matter most to your users.