During the last months, Model Context Protocol (MCP) has become a hot topic in the tech sector. While many sources are celebrating the convenience and standardization that MCP brings to classical tool calling, many others are highlighting not only the gaps in the protocol but also the new security challenges that it introduces.

At Galtea, we’re constantly building new features to facilitate the integration of new frameworks and protocols. As part of this work, we want to outline how MCP increases the attack surface of your LLM-based products and how Galtea can help you address these issues.

(Very brief) Introduction to Model Context Protocol

Model Context Protocol establishes a standardized way for LLM-powered applications (clients) to interact with external tools provided by MCP servers. In this architecture, clients like Claude Desktop or Cursor incorporate LLMs and grant them access to tools from MCP servers, which typically run locally on a user’s computer. The protocol aims to create a consistent interface for tool interactions across different LLM applications, potentially simplifying development and enhancing capabilities.

Despite its standardization benefits, MCP faces substantial resistance due to security vulnerabilities, implementation challenges, and fundamental design limitations that raise concerns among developers creating LLM-based products.

Security Concerns Impeding MCP Adoption

Prompt Injection Vulnerabilities

One of the most significant barriers to MCP adoption involves security vulnerabilities, particularly related to prompt injection attacks. Already various research teams have documented how MCP clients like Cursor can fall victim to attack vectors such as “Hidden Malicious Instructions,” where the docstrings of tools are passed to LLMs as tool descriptions and can manipulate the LLM’s behavior.

Although these vulnerabilities aren’t inherent to the MCP protocol itself, they arise whenever tools are provided to LLM systems, which cause additional problems like “tool poisoning prompt injection attacks” and data exfiltration risks.

Rug pull attacks

Another critical security concern is the lack of proper version control for MCP servers which enables the so-called rug pull attacks. Currently, MCP clients cannot version control which MCP server version they’re using, creating a significant vulnerability. This means that even if a client validates a server’s security during initial configuration, the code inside can be changed later, potentially injecting malicious instructions after approval.

Cross-Server Exploitation

Security researchers have identified that malicious MCP servers can contain instructions that misuse other connected MCP servers. For example, if a client connects to both an email MCP server and a malicious server, the latter could direct the LLM to use the email server to send damaging emails from the user’s account. This cross-server exploitation represents a novel security risk that many developers are still learning to address.

Command injection vulnerabilities

MCP servers without robust control systems and guardrails, enable the execution of arbitrary code, since MCP implementations could pass arbitrary unescaped strings to system commands like os.system(), creating obvious security holes. Such implementation vulnerabilities, while theoretically fixable, create considerable risks for developers and deployers alike.

Data Exfiltration and Data Privacy Concerns

MCP makes it easier to accidentally expose sensitive data to third parties. Even without malicious actors and using only official MCP servers, users might unintentionally share sensitive information. For example, a user connecting Google Drive and Substack MCPs to draft content might have their assistant autonomously read relevant private documents and include unintended details.

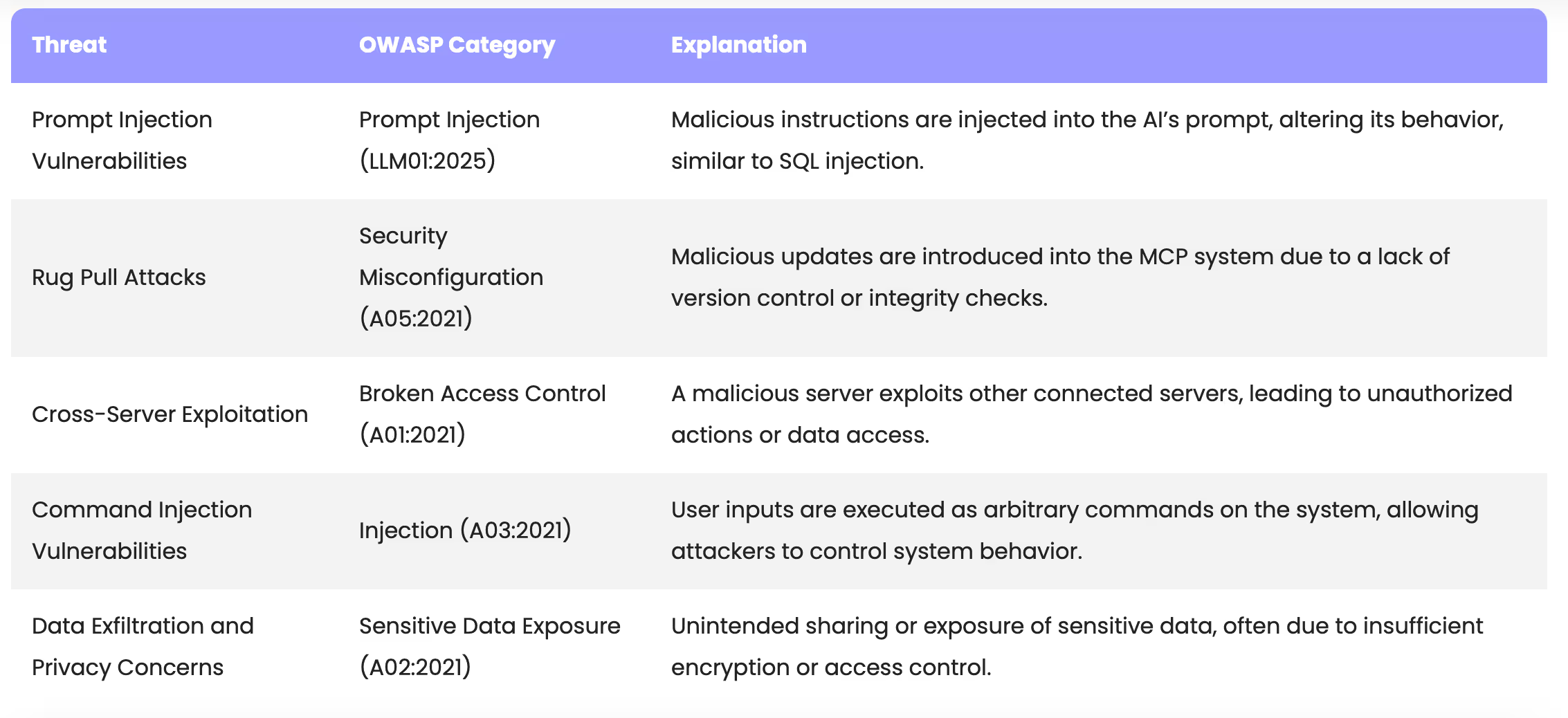

Here is a summary of all the threats with their corresponding OWASP category.

Galtea Platform for Security and Red Teaming

The technological landscape for constructing AI products is undergoing a rapid evolution, with a pace that is arguably unprecedented in any other field. This dynamic environment constantly introduces new technologies, frameworks, and protocols, which can inadvertently expand the attack surface and elevate security risks. At Galtea, we are committed to staying at the forefront of these advancements, ensuring that our platform incorporates the testing of the latest security threats to mitigate them before they are encountered in production.

Staying abreast of these rapid changes necessitates a proactive approach to security. This involves not only following the latest technologies but also investigating their security implications. Our team of experts is dedicated to continuously monitoring the threat landscape, identifying potential vulnerabilities in LLM based products, and implementing robust testing methodologies as a part of preventive countermeasures.

As a part of our dedication to ensuring that AI products are safe and trustworthy we have implemented these new threats to our red teaming test suite and benchmarked the security tests. During the following weeks, we will share a part of this work, to contribute to the LLM evaluation ecosystem.

If you are worried about the security of your AI products, at Galtea we can help you.

Book a demo with us: Galtea Demo