Building a generative AI project reveals a fundamental truth: a strong evaluation strategy is the key to success. This is a widely accepted principle among AI leaders, as scaling GenAI solutions in enterprise environments brings unique challenges. Here are just a few insights from top AI experts:

“Even with LLM-based products, you still need human input to manage quality… Evaluations aren’t simple [and] each app needs a custom approach.” — Greg Brockman, President at OpenAI

“Writing a good evaluation is more important than training a good model.” — Rishabh Mehrotra, Head of AI at Sourcegraph

“A team’s evaluation strategy determines how quickly and confidently a team can iterate on their LLM-powered product.” — Jamie Neuwirth, Head of Startups at Anthropic

“You must ensure that your models are reliable, that you address bias, that your solution is robust and explainable, and that you are transparent and accountable when using AI.” — Christian Westermann, Group Head of AI at Zurich Insurance

Scaling AI-powered products is a challenge, even for the most advanced teams. Generative AI has changed the way we interact with software, data, and decision-making processes by introducing natural language as a primary interface. This shift dramatically expands the input space, making it significantly harder to predict and evaluate outputs.

The Four Stages of a GenAI Project

To understand where enterprise teams struggle most in their GenAI projects, we need to break the process into its fundamental stages:

- Stage 1: Assessing the Use Case Potential. Before investing resources, teams must validate whether an LLM-powered solution aligns with business goals.

- Stage 2: Building a Functional MVP. The first working version of the system is developed, focusing on core use cases and minimal functionality.

- Stage 3: Iterating & Scaling the Product. Once an MVP is validated, the focus shifts to optimization, expanding functionalities, and refining performance.

- Stage 4: Deploying to Production. A fully developed GenAI system is deployed for real-world usage.

Most enterprise teams reach Stage 2 easily but run into growing complexity when transitioning to Stage 3, where uncertainty, technical challenges, and risk concerns emerge. At this point, teams start asking:

- How do we ensure our LLM behaves reliably across different scenarios?

- How do we measure improvements between model versions?

- How do we prevent regressions without slowing down development?

- What are the key risks we need to mitigate before scaling?

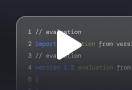

These questions arise because many teams fail to align their evaluation techniques with the stage of their project. Each stage requires its own evaluation approach, and what works for an MVP is rarely enough once the product starts to scale.

You cannot move forward without evolving your evaluation approach. This realization is why, in 2025, enterprise teams are finally succeeding in getting their GenAI products into production.
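To make the Stage 3 questions about measuring improvements and preventing regressions more concrete, here is a minimal sketch of a regression check between two model versions. Everything in it is an illustrative assumption rather than a prescribed method or any particular product's API: the `EvalCase` structure, the keyword-based `score` function, the `compare_versions` helper, and the two stub "models", which are plain Python functions returning canned answers. A real project would call an actual LLM and would likely use a richer scoring method.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    """One fixed test prompt plus keywords a good answer should mention (hypothetical format)."""
    prompt: str
    expected_keywords: tuple[str, ...]


def score(output: str, case: EvalCase) -> float:
    """Return the fraction of expected keywords found in the output (case-insensitive)."""
    if not case.expected_keywords:
        return 1.0
    text = output.lower()
    hits = sum(1 for kw in case.expected_keywords if kw.lower() in text)
    return hits / len(case.expected_keywords)


def evaluate(model: Callable[[str], str], cases: list[EvalCase]) -> list[float]:
    """Run the model on every case and return one score per case."""
    return [score(model(case.prompt), case) for case in cases]


def compare_versions(
    baseline: Callable[[str], str],
    candidate: Callable[[str], str],
    cases: list[EvalCase],
    tolerance: float = 0.0,
) -> dict:
    """Compare two model versions on the same cases and list per-case regressions."""
    if not cases:
        raise ValueError("at least one evaluation case is required")
    base_scores = evaluate(baseline, cases)
    cand_scores = evaluate(candidate, cases)
    # A case regresses when the candidate scores lower than the baseline by more than the tolerance.
    regressions = [
        case.prompt
        for case, base, cand in zip(cases, base_scores, cand_scores)
        if cand < base - tolerance
    ]
    return {
        "baseline_mean": sum(base_scores) / len(base_scores),
        "candidate_mean": sum(cand_scores) / len(cand_scores),
        "regressions": regressions,
        "passed": not regressions,
    }


# Stub "models" standing in for two versions of an LLM-powered system (assumptions for the demo).
def model_v1(prompt: str) -> str:
    answers = {
        "What is the refund policy?": "You can request a refund within 30 days.",
        "How do I reset my password?": "Please contact support.",
    }
    return answers.get(prompt, "")


def model_v2(prompt: str) -> str:
    answers = {
        "What is the refund policy?": "Refunds are possible.",
        "How do I reset my password?": "Use the reset link sent to your email.",
    }
    return answers.get(prompt, "")


CASES = [
    EvalCase("What is the refund policy?", ("refund", "30 days")),
    EvalCase("How do I reset my password?", ("reset", "email")),
]

if __name__ == "__main__":
    print(compare_versions(model_v1, model_v2, CASES))
```

In this toy setup the candidate's average score is higher than the baseline's, yet the check still fails because one prompt got worse. That is the kind of per-case signal an aggregate metric alone can hide, and it is one reason a fixed, versioned test set may be worth maintaining alongside the product.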

Building Trust in Artificial Intelligence

At Galtea, we help teams transition from MVP to production with confidence. We provide automated, standardized testing, robust scoring mechanisms, and comprehensive traceability, so that every iteration is backed by key performance metrics. This allows teams to make informed decisions and deploy AI systems they can trust.

If your team is struggling with evaluation at Stage 3, we can help.

Book a demo with us: Galtea Demo